Introduction

In the dynamic world of data analytics, legacy ERP systems often present unique challenges. These systems, rich in historical data, frequently lack direct access to their underlying data platforms outside the ERP environment. One common scenario involves exporting data from a legacy ERP system to Azure Synapse Warehouse for advanced analytics. However, the process isn’t always straightforward due to limitations imposed by the ERP system and the Synapse Warehouse.

In this blog post, we’ll delve into an innovative solution that harnesses the power of Docker, Azure SQL Database, and Azure Data Lake Gen2. By thinking outside the box, we can simplify the import process, reduce costs, and enhance data accessibility.

The Problem

Legacy ERP System Constraints: Our legacy ERP system stubbornly withholds direct access to its underlying data platform. Instead, it grudgingly provides a backup file (in .bak format) containing the precious data we seek.

Azure Synapse Warehouse Limitations: Alas, Synapse Warehouse refuses to accept .bak or .bacpac files directly. This limitation complicates our data migration dance.

The Solution

Our ingenious approach introduces an intermediary step using a temporary SQL Server instance hosted within a Docker container. Let’s break down the solution:

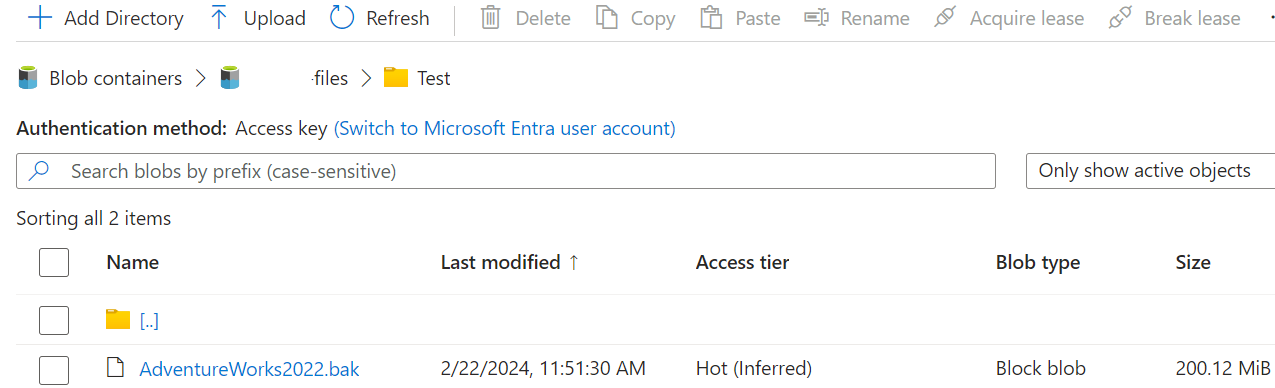

Step 1: Upload the .bak File

The legacy ERP system reluctantly generates a .bak file containing the data we crave.

We dutifully upload this .bak file to a designated path in Azure Data Lake Storage Gen2.

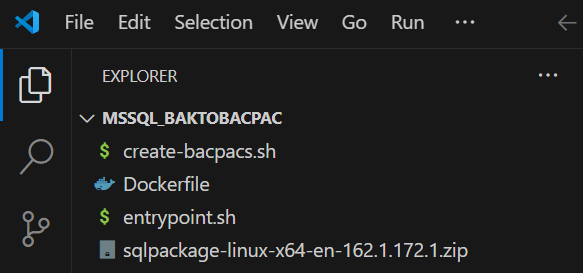

Step 2: Crafting the Docker Image

We roll up our sleeves and create a custom Docker image to serve as our temporary SQL Server instance. Here’s the recipe:

Base Image: We kick things off with the official SQL Server 2022 image from Microsoft’s container registry:

FROM mcr.microsoft.com/mssql/server:2022-latest

Installing Azure CLI:

We update the package list and install the necessary dependencies:

RUN apt-get update

RUN apt-get install -y ca-certificates curl apt-transport-https lsb-release gnupg

Next, we set up the Microsoft GPG key and configure the package repository:

RUN mkdir -p /etc/apt/keyrings

RUN curl -sLS https://packages.microsoft.com/keys/microsoft.asc | gpg --dearmor | tee /etc/apt/keyrings/microsoft.gpg > /dev/null

RUN chmod go+r /etc/apt/keyrings/microsoft.gpg

RUN AZ_DIST=$(lsb_release -cs)

RUN echo $AZ_DIST

RUN echo "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/microsoft.gpg] https://packages.microsoft.com/repos/azure-cli/ $AZ_DIST main" > /etc/apt/sources.list.d/azure-cli.list

Installing Azure CLI:

We install the Azure CLI, mssql-tools, and sqlpackage:

RUN apt-get update

RUN apt-get install -y azure-cli mssql-tools

Step 3: Data Extraction and Transformation

The Docker container dutifully reads the .bak file from Azure Data Lake Storage Gen2.

It gracefully exports the data as a .bacpac file, a format compatible with Azure SQL Database.

Step 4: Orchestrating the Process

Entrypoint Script:

Our entrypoint script kicks off the SQL Server instance and executes the create-bacpacs.sh script file:

/opt/mssql/bin/sqlservr & ./create-bacpacs.sh

Create Bacpac Script:

We follow these steps within the create-bacpacs.sh script:

- Download the .bak file from Azure Data Lake Storage Gen2.

- Restore the database using SQL Server commands.

- Export the data to a .bacpac file.

Step 5: Deploy Docker Application to Azure

Push the Docker Image to Azure Container Registry:

Authenticate and push the custom Docker image to your Azure Container Registry:

docker login testregistry.azurecr.io --username testregistry --password <password>

docker tag new_image2 testregistry.azurecr.io/new_image2

docker push testregistry.azurecr.io/new_image2

Deploy an Azure Container App Job:

Create a Container App job from the Azure portal, using the Docker image stored in the registry.

Set environment variables ACCEPT_EULA=Y and MSSQL_SA_PASSWORD=<sql_server_password>.

Conclusion

By embracing Docker and thinking beyond the ordinary, we’ve streamlined the data import process. Our solution reduces complexity, trims costs, and ensures seamless data flow from legacy systems to Azure Synapse Warehouse. Remember, sometimes the most elegant solutions lie just beyond the traditional boundaries. Happy data wrangling.

Contact us or write to info@gain-insights.com to explore a hands-on session or for quick discussion of your requirements.